I had a fascinating conversation with Adam Sapek on Wednesday about software, aesthetics, and mental models. He posed this question: Do we use elegance (say) as a design criterion because it necessarily leads to better software, or simply because it helps us to think about software better?

Aesthetic criteria seem to help humans make better software [1]. It is quite possible that a sense of what’s beautiful in software is common among all humans, just as there seems to be a neurological basis for visual aesthetics [2].

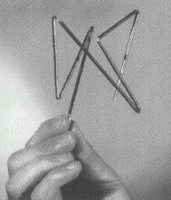

But what about software that is developed by aliens, e.g. computers themselves? Genetic algorithms, for example, might yield a better solution, but one that is “ugly” in human terms. They sometimes come up with “weird” and “counter-intuitive” solutions that are better than ours [3], such as unusual, highly asymmetric orbit configurations for multi-satellite constellations that minimize losses in coverage; unusually shaped radio antennas (illustrated above); and a solution to a problem in cellular automata theory that not only outperformed every human-created solution devised over the last two decades, but was also qualitatively different.

As Steven Vogel argues in Cats’ Paws and Catapults, nature and human engineers arrive at very different solutions to the same problems, while relying on similar engineering principles. To pick a few examples: right angles are rare in nature, but common in human technologies; nature builds wet and flexible structures, we go for dry and stiff; nature’s hinges mainly bend, and ours mainly slide.

It may turn out that our instinctive aesthetics for software will diverge from what suits the systems on which our software runs. For example, concurrent programming seems to be very hard for humans; our criteria for “good software” may not work very well for many-processor architectures. We may be able to build tools that come up with good solutions, but we won’t have an intuitive grasp of why they’re good. Computer science may then find itself in a crisis of intelligibility like the one physics encountered over the interpretation of quantum mechanics a century ago.

--------

[1] Don Knuth was an early proponent of aesthetics in software, as in his “literate programming” initiative. According to Gregory Bond in “Software as Art,” Communications of the ACM, August 2005/Vol. 48, No. 8, p. 118, Knuth identified the following as properties of software that inspire pleasure or pain in its readers: correctness, maintainability, readability, lucidity, grace of interaction with users, and efficiency. Charles Connell proposes that all beautiful software has the following properties: cooperation, appropriate form, system minimality, component singularity, functional locality, readability, and simplicity.

[2] Zeki and Kawabata (Journal of Neurophysiology 91: 1699-1705, 2004) found, using functional MRI scans, that the perception of different categories of paintings (landscapes, still lifes, portraits) is associated with distinct and specialized visual areas of the brain, and that parts of the brain are activated differently when subjects view pictures they have self-identified as beautiful or ugly. For more papers on neuroesthetics, see http://www.neuroesthetics.org/research/index.html.

[3] These examples were taken from Adam Marczyk’s Genetic Algorithms and Evolutionary Computation. It provides a useful inventory of GA applications, seemingly unaffected by the paper’s polemical goal of rebutting creationists’ claims about genetic algorithms and biological evolution.

Thank you for reading, and thank you for your feedback, both in the blog comments, and privately.
