The overruns in software projects demonstrate that our intuitions can be weak for knowledge goods. Our world is filling up with things that evolution has not prepared our brains for. Software is a prime example, and the frequent failures of large software projects are a symptom of a deeper problem than a lack of project management skills.

This is an example of the class of ‘innately hard problems’, which our evolutionary heritage has not equipped us to solve instinctively. They’re different from ‘intrinsically’ hard problems like the halting problem in computer science, that is, problems which are hard to solve whether a human is involved or not.

Why we struggle to reason about software

Lakoff and Johnson [1] make a persuasive case that our methods of reasoning are grounded in our bodies and our evolutionary history. Human artifacts which are not constrained by the physical, natural world can confound our intuitions. It doesn’t mean we can’t build them, reason about them, or use them, but it does mean that we have to be wary of assuming that our instincts about them will be correct.

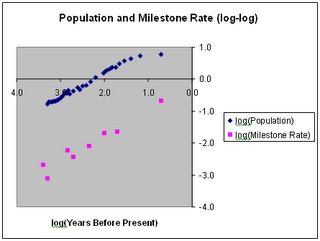

Software is a good example of such an artifact. (Others include lotteries, quantum mechanics, exponential growth, very large or very small quantities, and financial markets.) Software is intangible and limitless [2] [3], and is built out of limitless intangibles – the ideas of programmers, and other pieces of software. Software modules can ‘call’ one another, i.e. delegate work by passing messages, essentially without limit. All these components become dependent on one another, and the number of permutations of who can call whom is unimaginably large. Contrast this with a mechanical device, where the interactions between pieces are constrained by proximity, and are thus severely limited in number.
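To put rough numbers on that contrast, here is a minimal sketch in Python. It assumes a toy model of my own, not one taken from the sources cited here: n software modules, any of which may call any other, versus n mechanical parts laid out in a row where each part touches only its immediate neighbours. The function names are invented for illustration.

```python
def possible_call_pairs(n: int) -> int:
    """Ordered (caller, callee) pairs among n modules, any of which may call any other."""
    return n * (n - 1)


def adjacent_part_pairs(n: int) -> int:
    """Touching pairs among n parts in a row (toy assumption: neighbours only)."""
    return max(n - 1, 0)


def possible_call_graphs(n: int) -> int:
    """Distinct 'who calls whom' graphs: each ordered pair is either wired up or not."""
    return 2 ** possible_call_pairs(n)


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(n, adjacent_part_pairs(n), possible_call_pairs(n), possible_call_graphs(n))
```

For ten components, the row of parts has 9 touching pairs, while the modules have 90 possible caller/callee pairs and 2**90 (roughly 10^27) possible call graphs. Real devices and real programs are messier than this, but the gap in scale is the point.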

Software project overruns

Software projects are subject to overruns of mythic proportions, more so than other engineering tasks [4].

IEEE Spectrum devoted its September 2005 issue to software failures. It leads with a story on how the FBI blew more than $100 million on case-management software it will never use. In his review article, Robert Charette summarizes the long, dismal history of IT projects gone awry. He mentions the giant British food retailer J Sainsbury PLC, which wrote off its $526 million investment in an automated supply-chain management system last October. Back in 1981, to pick another example, the U.S. Federal Aviation Administration began looking into upgrading its antiquated air-traffic-control system. According to Charette, the effort to build a replacement soon became riddled with problems; by 1994, when the agency finally gave up on the project, the predicted cost had tripled, more than $2.6 billion had been spent, and the expected delivery date had slipped by several years.

Charette continues: “Of the IT projects that are initiated, from 5 to 15 percent will be abandoned before or shortly after delivery as hopelessly inadequate. Many others will arrive late and over budget or require massive reworking. Few IT projects, in other words, truly succeed.” He closes with this telling assessment: “Most IT experts agree that such failures occur far more often than they should. What's more, the failures are universally unprejudiced: they happen in every country; to large companies and small; in commercial, nonprofit, and governmental organizations; and without regard to status or reputation.”

IT failures happen more often than expected, everywhere, to everyone … There is something fundamental going on here.

Why do software projects fail?

In Waltzing with Bears, Tom DeMarco and Timothy Lister boil software project risks down to five things: inherent schedule flaws, requirement creep, employee turnover, specification breakdown, and poor productivity. However, there’s nothing special about software here; these problems apply to any engineering project [5]. Further, they don’t explain why software failures are so much more dramatic than those in other engineering disciplines.

The underlying reason for the surprising failure of large software projects is that the embodied intuitions of even the best managers, even after decades of experience, fail to match the nature of what’s being managed.

Our mental models of projects are for building physical things. Toddlers play with building blocks, kids with Lego; even software games emulate physical reality. However, a physical structure and a piece of software are different in their degree of interconnectedness. If an outside wall of my house fails, it’s unlikely to bring down more than one corner. All the pieces of the house are connected, certainly, but their influence on each other decreases with distance. Large pieces of software consist of modules that can be combined in a practically unbounded number of permutations: not only is the number large, one cannot predict at design time in what order the combinations will occur. Any module is instantly “reachable” from any other module. Here’s Robert Charette in IEEE Spectrum again:

“All IT systems are intrinsically fragile. In a large brick building, you'd have to remove hundreds of strategically placed bricks to make a wall collapse. But in a 100 000-line software program, it takes only one or two bad lines to produce major problems. In 1991, a portion of AT&T’s telephone network went out, leaving 12 million subscribers without service, all because of a single mistyped character in one line of code.”

The failure is also omni-directional because of the non-physical interconnectedness of software systems. If the ground floor of a building is damaged, the whole building may collapse; however, one can remove the top floor without affecting the integrity of the rest of the structure. There is no force of gravity in software systems: errors can propagate in any direction.
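One way to picture the difference is as reachability in a graph. The sketch below is a toy model with invented floor and module names, not a description of any real system: an edge from A to B means “a fault in A can affect B”. In the building, damage only travels upward; in the software graph, mutual calls let it travel anywhere.

```python
from collections import deque
from typing import Dict, List, Set


def affected_by(fault: str, can_affect: Dict[str, List[str]]) -> Set[str]:
    """Return everything a fault can reach by following 'can affect' edges (breadth-first)."""
    seen = {fault}
    queue = deque([fault])
    while queue:
        node = queue.popleft()
        for neighbour in can_affect.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen - {fault}


# Hypothetical three-storey building: each floor only supports the floor above it.
building = {"ground": ["first"], "first": ["top"], "top": []}

# Hypothetical program: modules call one another freely, so influence runs in cycles.
software = {
    "ui": ["billing", "logging"],
    "billing": ["database", "logging"],
    "database": ["logging", "ui"],
    "logging": ["ui"],
}

if __name__ == "__main__":
    print(affected_by("ground", building))  # both upper floors
    print(affected_by("top", building))     # nothing
    print(affected_by("logging", software)) # every other module
```

In this made-up example a fault in the top floor reaches nothing, while a fault in any one of the four modules reaches all of the other three.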

Testing is the bottleneck in modern software systems development, and that bottleneck derives from the permutation problem. It’s easy to write a feature, but getting it tested as part of the whole may make it impossible to ship. Eric Lippert’s famous How many Microsoft employees does it take to change a lightbulb? explains how an “initial five minutes of dev time translates into many person-weeks of work and enormous costs.” While there are research projects in computer science that use advanced mathematics to make the permutation problem tractable, I don’t know of any that have proven their worth in shipping large code bases.
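A back-of-the-envelope sketch shows how quickly the testing burden grows. The assumptions are mine, for illustration only: a product with n independent on/off features, where a thorough test pass would try every combination, and a workflow of k steps that users may perform in any order. The feature names are hypothetical.

```python
import math
from itertools import product


def feature_combinations(n_features: int) -> int:
    """Number of on/off configurations of n independent features."""
    return 2 ** n_features


def step_orderings(n_steps: int) -> int:
    """Number of distinct orders in which n steps can be performed."""
    return math.factorial(n_steps)


def all_configurations(features):
    """Enumerate every on/off configuration; only feasible for a handful of features."""
    return [
        dict(zip(features, states))
        for states in product([False, True], repeat=len(features))
    ]


if __name__ == "__main__":
    for config in all_configurations(["spellcheck", "autosave", "sync"]):
        print(config)
    print(feature_combinations(20), step_orderings(10))
```

Adding one feature is a small change to the code, but under these assumptions it doubles the number of configurations: twenty features already give 1,048,576 combinations, and ten reorderable steps give 3,628,800 orderings.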

Comparisons with other branches of engineering

The success of silicon chip engineering shows that it’s not simply the exponential growth in the number of components that makes software engineering so unpredictable. Transistor density has been growing exponentially on silicon chips for decades. While fabrication plants are becoming fabulously complex, schedule miscalculations are far less severe than with software; slips are typically measured in quarters, not years. Software visionaries love to invoke Moore’s Law when talking about the future, but Moore was talking about hardware. Software’s ‘bang for the buck’ has not grown exponentially. When you next get a chance, ask your favorite software soothsayer why there’s no Moore’s Law for software.

Another telling characteristic of software slips is that they happen in the design phase. In other engineering projects, delays typically happen in production, not in design. Software is, of course, all design, all the time. Other engineering fields have robust models for the failure modes of their materials; we don’t have the equivalent models for ideas, the ingredients of software.

Saying that software engineering is still in its infancy isn’t an adequate explanation; it’s been going for at least fifty years. The SAGE early warning missile system was fully deployed in 1962, and led directly to the development of the SABRE air travel reservation system and later air traffic control systems. When SABRE debuted in 1964, it linked 2,000 terminals in sixty cities.

In sum: Physical metaphors of construction don’t apply to software. However, they’re all we have when we think intuitively. Smart people can invent abstract tools, and we can learn heuristics to calculate answers, just as we can in quantum mechanics. That doesn’t mean we understand either of them in our bones.

Update 3 Jan 05: fixed 'innately' for 'intrinsically' typo in second paragraph; thanks, S.

----- Notes -----

[1] Lakoff and Johnson’s Metaphors We Live By (1980) first brought this perspective to public attention. Their more recent Philosophy in the Flesh (1999) uses their notions of “embodied mind” to analyze systems of philosophical thought.

[2] Money is intangible, but limited in the sense that its ownership is a zero-sum game; either you have the money, or I do.

[3] Your ownership and use of a piece of software doesn’t impose any constraint on my enjoyment of it. This is only strictly true of “local software”. Software that is “hosted”, e.g. running on a central server and accessed by people across a network, is shared among all its users, and if there is sufficient demand your use of the software can affect mine. In both cases, though, the software is constructed out of other pieces of software, a compounding of intangibles.

[4] There is good anecdotal evidence that software projects unravel more messily than other kinds. However, it’s hard to imagine how one could prove this statement, since a comparison would need detailed histories of all projects in at least two engineering fields. Building the histories would require the revelation of a great deal of sensitive information, whether by companies or governments.

[5] Lorin May provides a list of ten Major Causes of Software Project Failures in Crosstalk: The Journal of Defense Software Engineering. Wikipedia has a list of criticisms of software engineering, with rebuttals.